A new safety layer aims to help robots sense people in real time without slowing production

Updated

February 13, 2026 10:44 AM

An industrial robot in a factory. PHOTO: UNSPLASH

Algorized has raised US$13 million in a Series A round to advance its AI-powered safety and sensing technology for factories and warehouses. The California- and Switzerland-based robotics startup says the funding will help expand a system designed to transform how robots interact with people. The round was led by Run Ventures, with participation from the Amazon Industrial Innovation Fund and Acrobator Ventures, alongside continued backing from existing investors.

At its core, Algorized is building what it calls an intelligence layer for “physical AI” — industrial robots and autonomous machines that function in real-world settings such as factories and warehouses. While generative AI has transformed software and digital workflows, bringing AI into physical environments presents a different challenge. In these settings, machines must not only complete tasks efficiently but also move safely around human workers.

This is where a clear gap exists. Today, most industrial robots rely on camera-based monitoring systems or predefined safety zones. For instance, when a worker steps into a marked area near a robotic arm, the system is programmed to slow down or stop the machine completely. This approach reduces the risk of accidents. However, it also means production lines can pause frequently, even when there is no immediate danger. In high-speed manufacturing environments, those repeated slowdowns can add up to significant productivity losses.

Algorized’s technology is designed to reduce that trade-off between safety and efficiency. Instead of relying solely on cameras, the company uses wireless signals, including Ultra-Wideband (UWB), mmWave and Wi-Fi, to detect movement and human presence. By analysing small changes in these radio signals, the system can detect motion and breathing patterns in a space. This helps machines determine where people are and how they are moving, even in conditions where cameras may struggle, such as poor lighting, dust or visual obstruction.
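To give a rough sense of how changes in a radio signal can reveal movement, here is a minimal sketch in Python. It is not Algorized's method, which the company has not published in detail. It assumes a hypothetical stream of received signal amplitudes and flags motion whenever the variance within a short sliding window exceeds a threshold. The function name `detect_motion`, the window size and the threshold are all illustrative assumptions.

```python
from statistics import pvariance


def detect_motion(amplitudes, window=5, threshold=0.5):
    """Flag motion for each sliding window of signal amplitudes.

    Hypothetical illustration: a person moving through a radio link
    tends to make the received amplitude fluctuate, so a high variance
    inside a window is treated as a sign of motion.
    """
    flags = []
    for start in range(len(amplitudes) - window + 1):
        segment = amplitudes[start:start + window]
        flags.append(pvariance(segment) > threshold)
    return flags


# Made-up sample readings: an empty room versus someone walking past.
still_room = [10.0, 10.1, 9.9, 10.0, 10.1, 10.0]
person_moving = [10.0, 12.5, 8.0, 11.5, 7.5, 12.0]

print(detect_motion(still_room))
print(detect_motion(person_moving))
```

A production system would likely work with far richer signal data and learned models rather than a fixed threshold. The sketch only shows the basic idea that disturbance in the signal carries information about what is happening in the space.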

Importantly, this data is processed locally at the facility itself — not sent to a remote cloud server for analysis. In practical terms, this means decisions are made on-site, within milliseconds. Reducing this delay, or latency, allows robots to adjust their movements immediately instead of defaulting to a full stop. The aim is to create machines that can respond smoothly and continuously, rather than reacting in a binary stop-or-go manner.
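The difference between a binary stop-or-go rule and a smoother response can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the company's implementation or a certified safety function. The function names and the distances (a 2.0 m zone, a 0.5 m stop distance) are hypothetical values chosen for the example.

```python
def zone_based_speed(distance_m, zone_radius_m=2.0):
    """Binary rule: stop entirely whenever a person is inside the zone."""
    return 0.0 if distance_m < zone_radius_m else 1.0


def continuous_speed(distance_m, stop_m=0.5, full_speed_m=2.0):
    """Graduated rule: scale speed linearly with distance to the person.

    Returns a fraction of full speed between 0.0 and 1.0.
    The thresholds are illustrative assumptions, not safety-rated values.
    """
    if distance_m <= stop_m:
        return 0.0
    if distance_m >= full_speed_m:
        return 1.0
    return (distance_m - stop_m) / (full_speed_m - stop_m)


# A worker standing 1.25 m from the robot:
print(zone_based_speed(1.25))   # the zone rule halts the machine
print(continuous_speed(1.25))   # the graduated rule keeps it moving slowly
```

Under the zone rule the machine halts as soon as the worker crosses the boundary, while the graduated rule lets it keep working at reduced speed and stop only when the person is very close. A scheme like this is only useful if the distance estimate arrives quickly, which is why on-site processing matters.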

With the new funding, Algorized plans to scale commercial deployments of its platform, known as the Predictive Safety Engine. The company will also invest in refining its intent-recognition models, which are designed to anticipate how humans are likely to move within a workspace. In parallel, it intends to expand its engineering and support teams across Europe and the United States. These efforts build on earlier public demonstrations and ongoing collaborations with manufacturing partners, particularly in the automotive and industrial sectors.

For investors, the appeal goes beyond safety compliance. As factories become more automated, even small improvements in uptime and workflow continuity can translate into meaningful financial gains. Because Algorized’s system works with existing wireless infrastructure, manufacturers may be able to upgrade machine awareness without overhauling their entire hardware setup.

More broadly, the company is addressing a structural limitation in industrial automation. Robotics has advanced rapidly in precision and power, yet human-robot collaboration is still governed by rigid safety systems that prioritise stopping over adapting. By combining wireless sensing with edge-based AI models, Algorized is attempting to give machines a more continuous awareness of their surroundings from the start.


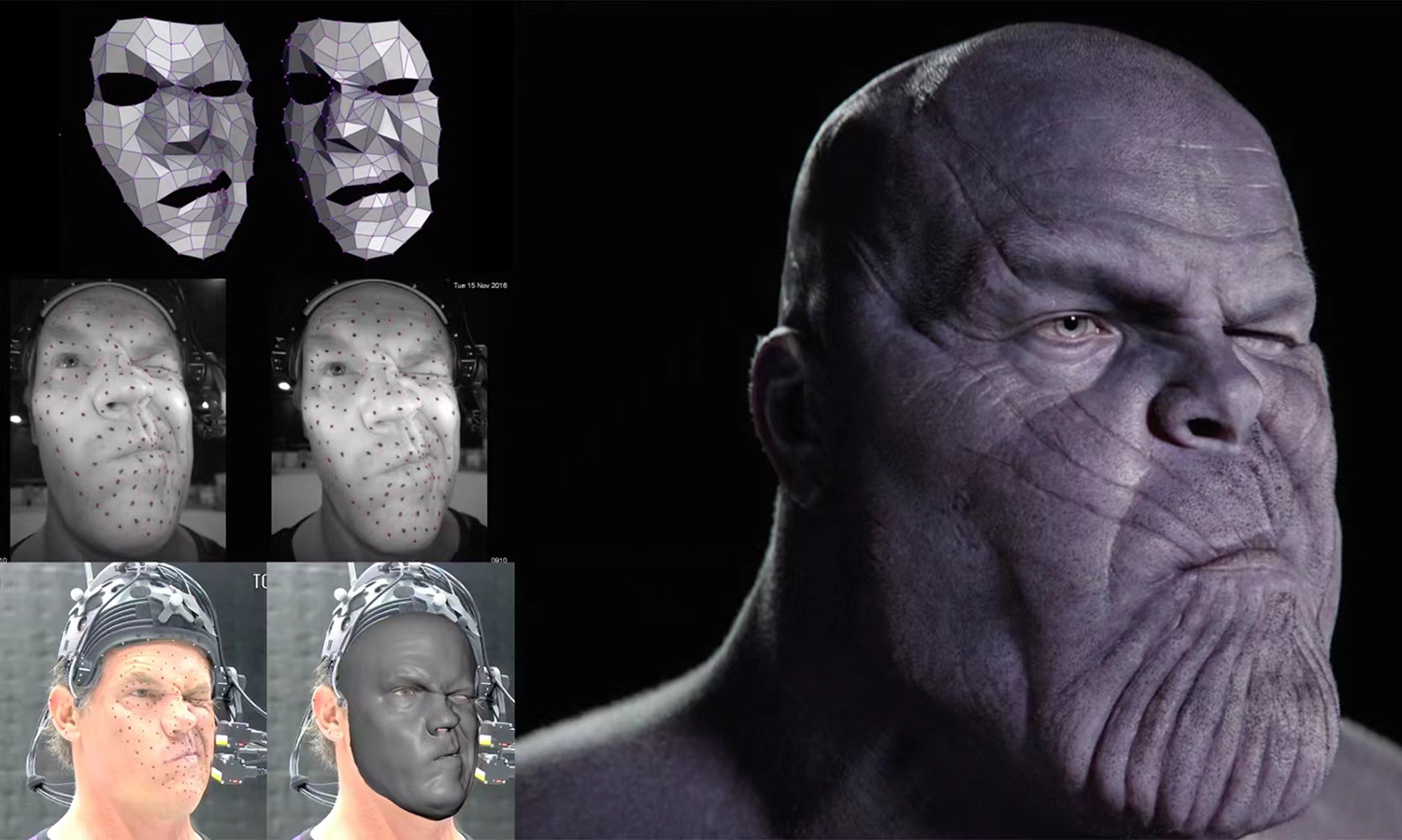

Where Hollywood magic meets AI intelligence — Hong Kong becomes the new stage for virtual humans

Updated

February 7, 2026 2:18 PM

William Wong, Chairman and CEO of Digital Domain. PHOTO: YORKE YU

In an era where pixels and intelligence converge, few companies bridge art and science as seamlessly as Digital Domain. Co-founded three decades ago by visionary filmmaker James Cameron, the company built its name through cinematic wizardry, bringing to life the impossible worlds of Titanic, The Curious Case of Benjamin Button and the Marvel universe. But today, its focus has evolved far beyond Hollywood: Digital Domain is reimagining the future of AI-driven virtual humans, and it’s doing so from right here in Hong Kong.


“AI and visual technology are merging faster than anyone imagined,” says William Wong, Chairman and CEO of Digital Domain. “For us, the question is not whether AI will reshape entertainment—it already has. The question is how we can extend that power into everyday life.”

Though globally recognized for its work on blockbuster films and AAA games, Digital Domain’s story is also deeply connected to Asia. A Hong Kong–listed company, it operates a network of production and research centers across North America, China and India. In 2024, it announced a major milestone—setting up a new R&D hub at Hong Kong Science Park focused on advancing artificial intelligence and virtual human technologies. “Our roots are in visual storytelling, but AI is unlocking a new frontier,” Wong says. “Hong Kong has been very proactive in promoting innovation and research, and with the right partnerships, we see real potential to make this a global R&D base.”

Building on that commitment, the company plans to invest about HK$200 million over five years and assemble a team of more than 40 professionals specializing in computer vision, machine learning and digital production. The team is still growing. “Talent is everything,” says Wong. “We want to grow local expertise while bringing in global experience to accelerate the learning curve.”

Digital Domain’s latest chapter revolves around one of AI’s most fascinating frontiers: the creation of virtual humans.

These are hyperrealistic, AI-powered characters capable of speaking, moving and responding in real time. Using the advanced motion-capture and rendering techniques that transformed Hollywood visual effects, the company now builds digital personalities that appear on screens and in physical environments, serving in media, education, retail and even public services.

One of its most visible projects is “Aida”, the AI-powered presenter who delivers nightly weather reports on Radio Television Hong Kong (RTHK). Another initiative, now in testing, will soon feature AI-powered concierges greeting travelers at airports, able to communicate in multiple languages and provide real-time personalized services. Similar collaborations are under way in healthcare, customer service and education.

“What’s exciting,” says Wong, “is that our technologies amplify human capability, helping to deliver better experiences, greater efficiency and higher capacity. AI-powered virtual humans can interact naturally, emotionally and in any language. They can help scale creativity and service, not replace it.”

To make that possible, Digital Domain has designed its system for compatibility and flexibility. It can connect to major AI models—from OpenAI and Google to Baidu—and operate across cloud platforms like AWS, Alibaba Cloud and Microsoft Azure. “It’s about openness,” says Wong. “Our clients can choose the AI brain that best fits their business.”

Establishing a permanent R&D base in Hong Kong marks a turning point for the company—and, in a broader sense, for the city’s technology ecosystem. With the support of the Office for Attracting Strategic Enterprises (OASES) in Hong Kong, Digital Domain hopes to make the city a creative hub where AI meets visual arts. “Hong Kong is the perfect meeting point,” Wong says. “It combines international exposure with a growing innovation ecosystem. We want to make it a hub for creative AI.”

As part of this effort, the company is also collaborating with universities such as the University of Hong Kong, City University of Hong Kong and Hong Kong Baptist University to co-develop new AI solutions and nurture the next generation of engineers. “The goal,” Wong notes, “is not just R&D for the sake of research—but R&D that translates into real-world impact.”

The collaboration with OASES underscores how both the company and the city share a vision for innovation-led growth. As Peter Yan King-shun, Director-General of OASES, notes, the initiative reflects Hong Kong’s growing strength as a global innovation and technology hub. “OASES was set up to attract high-potential enterprises from around the world across key sectors such as AI, data science, and cultural and creative technology,” he says. “Digital Domain’s new R&D center is a strong example of how Hong Kong can combine world-class talent, technology and creativity to drive innovation and global competitiveness.”

Digital Domain’s story mirrors the evolution of Hong Kong’s own innovation landscape—where creativity, technology and global ambition converge. From the big screen to the next generation of intelligent avatars, the company continues to prove that imagination is not bound by borders, but powered by the courage to reinvent what’s possible.